The case against human judgement

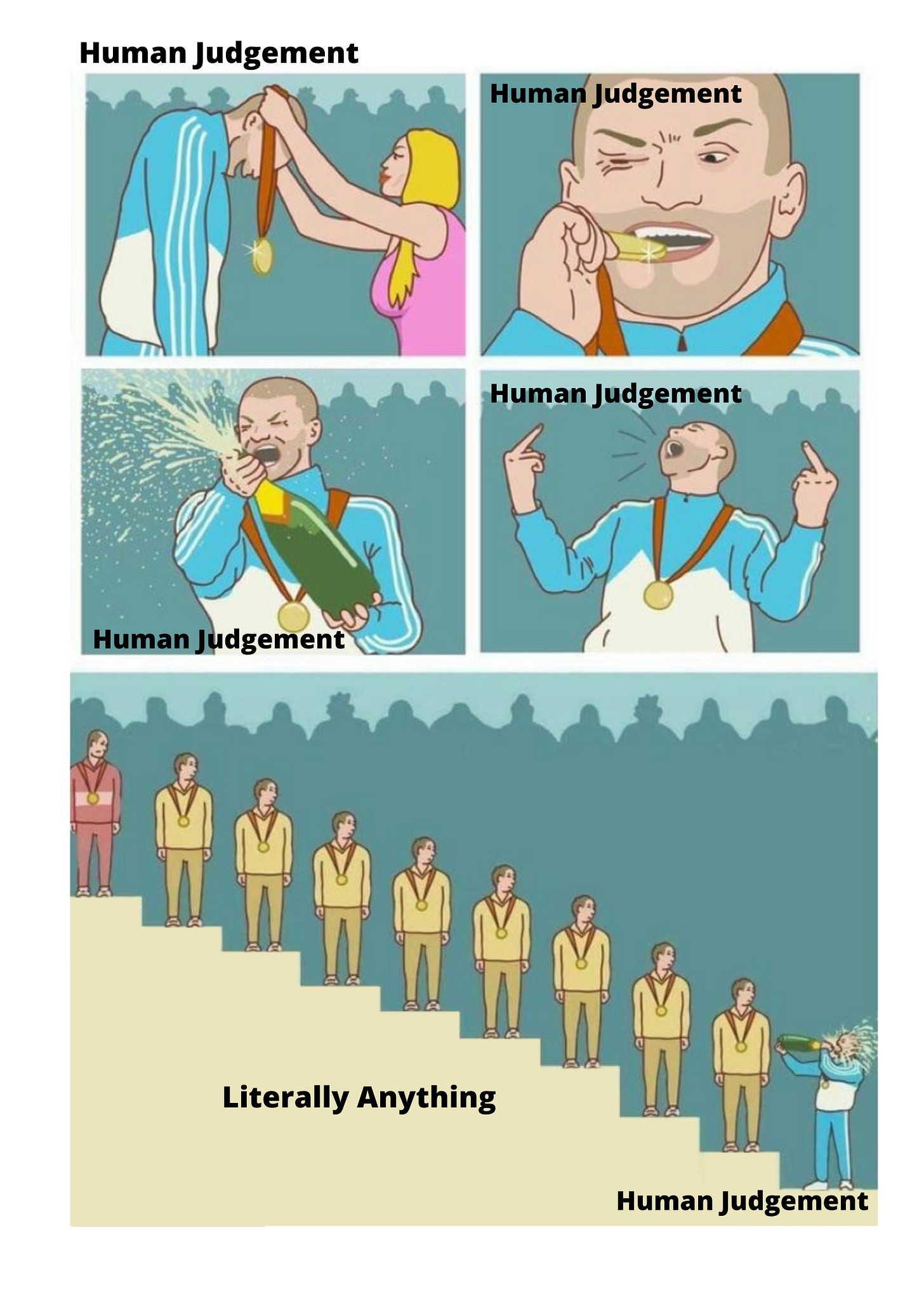

As most of you know, I am a big fan of trying to measure things, specifically measuring and predicting people’s performance. However, whenever I have these conversations with people, the most common pushback I get is “human judgement is the best”, or something along those lines. I get why people say it, but I don’t get why people genuinely believe it. In the case of measuring and predicting the performance of people, fears often lie in three areas:

Pattern matching

Bias

Bad incentives

But who said human judgement doesn’t do this already?

Pattern matching

Most humans pattern match. Even the humans who fund outliers for a living pattern match.

So when people push back against software for pattern matching, it is quite literally the pot calling the kettle black. Take Applicant Tracking Systems, for example. They are criticised for pattern matching badly, and rightly so. But what’s the human alternative? Recruiters do the exact same thing, but slower. In some cases it’s even worse, because you have to “network” with said recruiter beforehand so that they show some favouritism towards you. Who wants that?

Unlike humans, however, software can improve and is improving. Software has the potential to process millions of data points about an individual every single time; humans do not. We have reached our limit, while software is nowhere near its own.

Bias

Bias in software is real, but if I had to bet on which would improve the most over the next 100 years, I would choose software nine times out of ten. Our superiority complex brings about higher standards and more scrutiny of software, which I think is a good thing. It’s this scrutiny and high standard that make bias and poor performance in software extremely obvious. And it’s this obviousness that forces us to significantly improve our software very quickly. Human judgement, however, severely lacks this feedback loop. Our overestimation of our abilities produces lower standards and less scrutiny, making our problems much less obvious, which leads to very little improvement being made.

I understand the reluctance around having software measure and sort people. Trust me, I get it. However, I am already being discriminated against without software. So if there is a very real chance of creating a fairer measurement system for the masses, then we should take it. Even if it means things have to get worse before they get better.

Bad incentives

What you measure dictates behaviour, so I understand the apprehension towards software trying to do this. What I disagree with, however, is the idea that the default state (human judgement) doesn’t already do what we fear the most: have a measurement system in place that creates bad incentives. As long as you are rewarding people, hiring people or doing anything that involves sorting people, you (by default) have a measurement system in place and therefore already have good and/or bad incentives at play.

Pushback against software often focuses on the fact that it doesn’t capture the entirety of an individual and their work, and therefore creates bad incentives, whereas human judgement supposedly does capture it. In reality, the measurement of people within companies is nowhere near as sophisticated as everyone thinks it is. In fact, it is often based on visibility, projects, likeability, politics and memory. You make yourself extra visible in the office or online, and work on projects you pretty much know will be successful so that their success can, rightly or wrongly, be attributed to your efforts. You force yourself to be extra likeable, attending all socials, just so you seem like a warm and eager team player. You are strategic with your involvement in office politics because you know it influences your career, whether you participate in it or not. And finally, you hope and pray your manager and colleagues remember your performance over the last six months, dropping not-so-subtle reminders of your accomplishments during performance review season.

Given what we have established, why shouldn’t we try to build new software solutions? I totally understand and agree that these solutions could end up being worse, and in fact I am not a fan of most solutions when it comes to talent identification (apart from TripleByte). That said, just because things could get worse does not mean things are not already pretty bad. And oftentimes the solutions we dislike are simply beefed-up versions of what we already do.

It’s time we passed the baton. Humans have had years to improve our methods of measuring people, and we are still quite poor at it. At some point we have to look at our track record and acknowledge that we probably aren’t going to get (significantly) better anytime soon.